Web Search Engine Based Semantic Similarity Measure

Abstract

Semantic similarity offers a way to address the search problems many users face when retrieving information from the internet. The approach takes the information returned in search results and organizes related items according to the meaning of their content. This helps the user reach meaningful information without reading duplicated web pages, which are redundant and time-consuming. The approach relies on the crawling, indexing, ranking, and retrieval algorithms that drive semantic search engines. The building blocks of a semantic similarity measure are algorithms that compare words extracted from various databases by their meanings, apply language processing to determine how similar they are, and organize the results for the user. The approach yields encouraging results in terms of efficiency.

Web search engine based semantic similarity measure

The internet is the biggest resource center abundant with information from every area of study, research, learning, and life in general. The internet since its invention has provided an invaluable platform for acquiring information. With time, it has grown bigger and more essential to the daily lives of humans as a source of information. This trend is on the rise and the internet will continue to grow.

The biggest challenge with the internet, however, is making meaningful use of it by extracting information relevant to the user’s needs. Search engines are used to extract content from web pages, but users often get results they were not looking for, or a set of duplicated pages that all say similar things. This creates the need for a solution that gives users the information they want, with the variety and depth that research requires.

Web mining involves extracting meaningful information from the internet using various data mining techniques and approaches. This research paper focuses on semantic similarity as one of several solutions to the problem of poor search engine results.

Related Work

Data mining has long faced the problem of commonalities among keyword search results. When a user searches for particular information, irrelevant or duplicated results are likely because of the large amount of content available and the inefficiency of most search engines. Sahami et al. (2006) suggest a new way in which short pieces of text can be checked for similarity by assigning the search results a single parameter. This approach aims at improving the efficiency of semantic similarity.

Chen et al. (2006) propose that content on the web be double-checked in order to gain better control over the results of a web search. This approach departs from traditional techniques that count and group web pages (Chen et al., 2006).

Cilibrasi et al. (2007) brought forth a new outlook on similarity based on the contextual meaning of words. This approach treats similarity as dependent on distance and diversity of usage. The technique is applicable to all search engines and databases. The authors present several ideas in support of the methodology: Kolmogorov complexity, information distance, compression-based similarity metrics, a technical description of the Google distribution, and the Normalized Google Distance (NGD) (Cilibrasi et al., 2007).
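For reference, the distance introduced by Cilibrasi and Vitanyi is usually written as follows. The symbols are not defined in this paper and are introduced here only for illustration: f(x) and f(y) are the page counts of the terms x and y, f(x, y) is the page count of pages containing both, and M is the total number of pages indexed by the search engine.

```latex
\mathrm{NGD}(x, y) =
  \frac{\max\{\log f(x), \log f(y)\} - \log f(x, y)}
       {\log M - \min\{\log f(x), \log f(y)\}}
```

A value near 0 indicates that the two terms almost always occur together, while larger values indicate that they rarely co-occur.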

Lin et al. (1998) argued that bootstrapping semantics from text is one of the greatest challenges in natural language learning. They defined a word similarity measure based on the distributional pattern of words (Lin et al., 1998). Pei et al. (2004) proposed a projection-based, pattern-growth method for mining sequential patterns efficiently (Pei et al., 2004).

Resnik et al. (1995) present a measure of semantic similarity in a taxonomy, based on the notion of information content (Resnik et al., 1995). Bollegala et al. (2011) proposed a technique that exploits the page counts and text snippets returned by a web search engine (Bollegala et al., 2011). Ming Li et al. (2004) proposed a measure that rests on the non-computable notion of Kolmogorov complexity and treated it as the similarity metric. It is a general mathematical theory of similarity that uses no background knowledge and no features specific to an application area (Ming Li et al., 2004).

Gledson et al. (2008) describe a page-count-based web similarity technique that can take all the words of a group into account when determining semantic similarity: a distributional measure that uses web search counts and seeks to establish similarity within groups of words.

These reviews of the literature show that semantic similarity measures play a critical role in relationship extraction, data extraction, community extraction, automatic metadata extraction, and document clustering. A more robust framework for discovering the semantic similarity between words is therefore needed.

Proposed Work

Given two keywords A and B, this paper approaches semantic similarity as the problem of constructing a function sim(A, B) that returns a value between 0 and 1. When A and B are highly similar (for example, when they are synonyms), we expect the semantic similarity value to be close to 1; otherwise, we expect it to be close to 0. We define a number of features that express the similarity between A and B using the page counts and snippets retrieved from a web search engine for the two words.

Using these features, a two-class Support Vector Machine is trained to separate synonymous from non-synonymous word pairs (Ming Li et al., 2004). This paper has the following objectives:

- To establish the semantic similarity of two keywords and their correlation.

- To improve the precision, recall, and F-measure of the proposed framework.

Findings

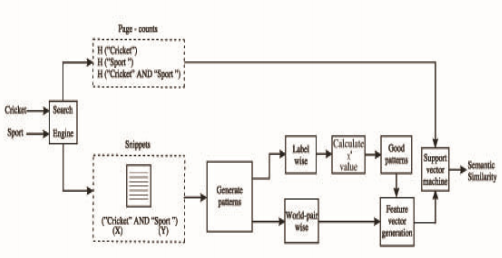

Figure 1 shows the schema of the suggested approach, which measures semantic similarity using the search results of a given search engine.

Figure 1

When a query q is submitted to a web search engine, snippets, which are short summaries of the search results, are returned to the user. First, we query the word pair in a web search engine; for instance, we query “sport” and “cricket” in Google. We obtain the page count for the word pair along with the page counts for the individual words, for example, H(sport), H(cricket), and H(sport AND cricket). The page counts are used to compute co-occurrence measures, and the values are stored for later use. The snippets from the search results are then collected. Snippets are collected only for the conjunctive query X AND Y. In total, we collect both the snippets and the page counts for 200 word pairs.

The chi-square technique is then used to identify the significant patterns, namely the top two hundred patterns of interest, from the pattern frequencies. After that, we combine these 200 top patterns with the co-occurrence measures already computed. If a pattern occurs in the set of top patterns, we record it with its frequency of occurrence among the patterns of the word pair. Otherwise, we set its frequency to 0. We thus obtain a feature vector with 204 values: the top 200 patterns and the four co-occurrence measures.

We use an SVM trained with SMO (Sequential Minimal Optimization) to find the optimal combination of the page-count-based similarity scores and the top-ranking patterns. The SVM is trained to classify synonymous and non-synonymous word pairs. We select synonymous and non-synonymous word pairs as training data and convert the SVM output into a posterior probability. We define the semantic similarity of two words as the posterior probability that they belong to the synonymous (positive) class (Bollegala et al., 2011).
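The construction of the 204-value vector can be sketched as follows. This is a minimal illustration rather than the implementation used in the paper; the function name, the argument names, and the ordering of the features (patterns first, co-occurrence measures last) are assumptions.

```python
def build_feature_vector(top_patterns, pair_pattern_freq, cooccurrence):
    """Assemble the feature vector for one word pair.

    top_patterns      -- ordered list of the selected top patterns (200 in the paper)
    pair_pattern_freq -- dict mapping each pattern found for this word pair to its frequency
    cooccurrence      -- the four page-count-based co-occurrence measures
    All three names are hypothetical and used only for this sketch.
    """
    # A top pattern that this word pair never produced gets frequency 0.
    pattern_features = [pair_pattern_freq.get(p, 0) for p in top_patterns]
    return pattern_features + list(cooccurrence)


# Toy example with three patterns instead of 200.
top = ["X is a Y", "X and Y", "Y such as X"]
vector = build_feature_vector(top, {"X and Y": 3, "X of Y": 7}, (0.2, 0.5, 0.33, 2.3))
print(vector)  # [0, 3, 0, 0.2, 0.5, 0.33, 2.3]
```

Patterns that the word pair produces but that are not among the top patterns (here "X of Y") are simply ignored.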
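The paper does not state how the SVM output is turned into a probability. One common choice, assumed here, is to pass the signed SVM decision value through a sigmoid (often called Platt scaling). The parameters `a` and `b` below are hypothetical placeholders; in practice they would be fitted on held-out data.

```python
import math


def svm_output_to_probability(decision_value, a=-1.0, b=0.0):
    """Map an SVM decision value to a probability in [0, 1] with a sigmoid.

    Computes 1 / (1 + exp(a * decision_value + b)). The default a and b are
    illustrative assumptions, not values taken from the paper.
    """
    z = a * decision_value + b
    # Two algebraically equal forms, chosen so that exp() never overflows.
    if z >= 0:
        e = math.exp(-z)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(z))


# A pair on the decision boundary gets probability 0.5; pairs deep inside
# the synonymous (positive) side approach 1.
print(svm_output_to_probability(0.0))  # 0.5
print(svm_output_to_probability(3.0) > svm_output_to_probability(-3.0))  # True
```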

Algorithm for Findings

The proposed pattern retrieval algorithm is used to determine the semantic similarity of two words A and B. As outlined in Table 1, we use the keywords to query the search engine and download snippets. Our wildcard query retrieves snippets in which A and B appear within a window of seven words. Because a typical search engine snippet contains about twenty words and combines two text fragments selected from a document, we expect the seven-word window to cover most of the relationships between the two words in snippets. The algorithm returns the extracted patterns together with their frequencies (Hughes et al., 2007).

The pattern retrieval algorithm described above yields a large number of unique patterns. Of these patterns, 80% occur fewer than ten times. Training a classifier on such sparse patterns is difficult. We must therefore measure the confidence of each pattern as an indicator of synonymy: because most patterns have a frequency below ten, it is hard to tell which ones are significant, so we compute a confidence value for each pattern in order to arrive at the significant ones. We use the chi-square value as the confidence of each pattern (Sahami et al., 2006).

The chi-square value of a pattern v is calculated with the standard formula for a 2×2 contingency table:

$$\chi^2 = \frac{(P + N)\,\bigl(p_v (N - n_v) - n_v (P - p_v)\bigr)^2}{P\,N\,(p_v + n_v)\,(P + N - p_v - n_v)}$$

where P and N are the total frequencies of all patterns retrieved from synonymous and non-synonymous word pairs, respectively, and p_v and n_v are the frequencies of pattern v retrieved from synonymous and non-synonymous word pairs, respectively.
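A simplified sketch of window-based pattern extraction is shown below. It is not the algorithm of Table 1: it only records the word sequence running from one query word to the nearest occurrence of the other, and it reads "seven-word window" as "at most seven words in between", which is an assumption. The function and parameter names are hypothetical.

```python
import re
from collections import Counter


def extract_patterns(snippets, word_a, word_b, max_gap=7):
    """Count lexical patterns that link word_a and word_b in the snippets.

    word_a is replaced by the placeholder X and word_b by Y, so that patterns
    from different word pairs become comparable.
    """
    counts = Counter()
    a, b = word_a.lower(), word_b.lower()
    for snippet in snippets:
        tokens = re.findall(r"\w+", snippet.lower())
        tokens = ["X" if t == a else "Y" if t == b else t for t in tokens]
        for i, tok in enumerate(tokens):
            if tok not in ("X", "Y"):
                continue
            other = "Y" if tok == "X" else "X"
            # Scan ahead, allowing at most max_gap words between the two placeholders.
            for j in range(i + 1, min(i + max_gap + 2, len(tokens))):
                if tokens[j] == other:
                    counts[" ".join(tokens[i:j + 1])] += 1
                    break
                if tokens[j] == tok:
                    break  # a closer occurrence of the same word starts its own span
    return counts


snippets = [
    "Cricket is a sport played with a bat and ball.",
    "Sport such as cricket is popular.",
]
patterns = extract_patterns(snippets, "sport", "cricket")
print(patterns["Y is a X"], patterns["X such as Y"])  # 1 1
```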
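The chi-square confidence score can be sketched in code. The sketch assumes the standard chi-square statistic for a 2×2 contingency table built from the four quantities named above; the function name and the handling of a zero denominator are assumptions made for illustration.

```python
def chi_square(P, N, pv, nv):
    """Chi-square confidence of a pattern v.

    P, N   -- total pattern frequencies for the two classes of word pairs
    pv, nv -- frequency of pattern v in each of the two classes
    Uses the standard 2x2 contingency-table form:
        (P + N) * (pv*(N - nv) - nv*(P - pv))**2
        / (P * N * (pv + nv) * (P + N - pv - nv))
    """
    numerator = (P + N) * (pv * (N - nv) - nv * (P - pv)) ** 2
    denominator = P * N * (pv + nv) * (P + N - pv - nv)
    if denominator == 0:
        return 0.0  # degenerate table: the pattern carries no evidence
    return numerator / denominator


# A pattern seen 30 times in one class and 10 times in the other,
# with 100 pattern occurrences per class in total.
print(chi_square(100, 100, 30, 10))  # 12.5
# A pattern that is equally frequent in both classes scores 0.
print(chi_square(100, 100, 20, 20))  # 0.0
```

The statistic is symmetric in the two classes, so a pattern that is strongly associated with either class receives a high score.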

Experimental Results

Page-count-based on the Co-occurrence Measures

Four well-known co-occurrence measures were computed for calculating semantic similarity from page counts: Pointwise Mutual Information (PMI), Dice, Overlap (Simpson), and Jaccard.

We implemented this in the Java programming language, using Eclipse as the open-source IDE. We issue the query “A AND B”, collect five hundred snippets for each word pair (A, B), and store them in the database.

Using the pattern retrieval algorithm, we extracted a very large number of patterns and kept only the top 200. We then compare each of the top 200 patterns, ranked by chi-square value, with the patterns generated for the given word pair. If a pattern extracted for the word pair is one of the top patterns, it is stored with a unique ID together with the frequency with which that word pair generates it (Gledson et al., 2008). If a top pattern is not matched, it is stored in the table with its unique ID and a frequency of 0.

The same table also holds the four measures Web-Overlap, Web-Jaccard, Web-Dice, and Web-PMI, which gives a table of 204 entries recording unique ID, frequency, and word pair. The frequency values are then normalized by dividing the value in each tuple by the sum of all frequency values. The resulting 204-dimensional vector is the feature vector of the given word pair. The feature vectors of all word pairs are converted into a Comma Separated Values (CSV) file. The generated .CSV file is fed to the SVM classifier integrated into the Weka software. The classifier then assigns each word pair a similarity score between 0 and 1.
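The four measures can be sketched from page counts as follows, where `h_a`, `h_b`, and `h_ab` stand for H(A), H(B), and H(A AND B). The paper does not give the exact formulas, so the sketch uses the usual textbook definitions. The cut-off `c` (which treats very small joint counts as noise) and the index size `n` used by PMI are assumptions, not values reported in this paper.

```python
import math


def web_jaccard(h_a, h_b, h_ab, c=5):
    """Jaccard coefficient: joint count over the count of pages with either word."""
    if h_ab <= c:
        return 0.0
    return h_ab / (h_a + h_b - h_ab)


def web_overlap(h_a, h_b, h_ab, c=5):
    """Overlap (Simpson) coefficient: joint count over the smaller single count."""
    if h_ab <= c:
        return 0.0
    return h_ab / min(h_a, h_b)


def web_dice(h_a, h_b, h_ab, c=5):
    """Dice coefficient: twice the joint count over the sum of single counts."""
    if h_ab <= c:
        return 0.0
    return 2 * h_ab / (h_a + h_b)


def web_pmi(h_a, h_b, h_ab, n=1e10, c=5):
    """Pointwise mutual information, with n as the assumed number of indexed pages."""
    if h_ab <= c:
        return 0.0
    return math.log2((h_ab / n) / ((h_a / n) * (h_b / n)))


# Toy page counts: H(A) = 100, H(B) = 50, H(A AND B) = 25.
print(web_jaccard(100, 50, 25))  # 0.2
print(web_overlap(100, 50, 25))  # 0.5
```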
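The normalization and export step can be sketched as follows. The paper's implementation is in Java; Python is used here only for brevity. The helper names and the column layout are hypothetical, and the sketch assumes that only the pattern frequencies are normalized.

```python
import csv
import io


def normalize_frequencies(freqs):
    """Divide each frequency by the sum of all frequencies."""
    total = sum(freqs)
    if total == 0:
        return [0.0 for _ in freqs]  # no pattern matched for this word pair
    return [f / total for f in freqs]


def to_csv(rows, header):
    """Serialize feature vectors (one row per word pair) as CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue()


# Toy example: three pattern frequencies, one co-occurrence value, one class label.
row = normalize_frequencies([2, 3, 5]) + [0.4, "synonymous"]
print(to_csv([row], ["p1", "p2", "p3", "web_dice", "class"]))
```

The returned text can be written to a file and loaded by a tool that reads CSV input.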

Test data

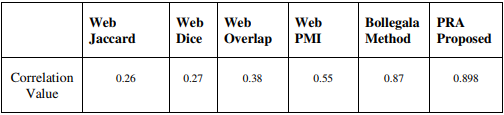

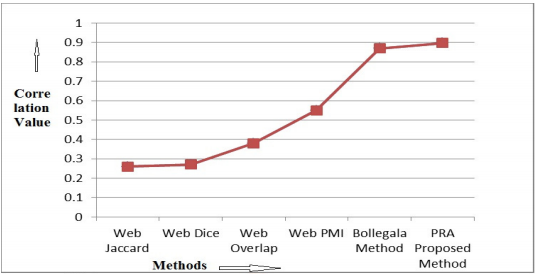

To test our framework, we chose the Miller-Charles benchmark dataset, which contains 28 word pairs. The proposed algorithm reaches a correlation of 89.8%, as shown in the table below.

Table 2. Comparison of the correlation value of the PRA with existing methods
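The paper does not say which correlation coefficient is reported. Assuming the Pearson coefficient between human ratings and computed similarity scores, the evaluation can be sketched as below. The numbers in the example are made up for illustration and are not taken from the Miller-Charles dataset.

```python
import math


def pearson_correlation(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 items")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical human ratings and system scores for four word pairs.
human = [3.9, 3.1, 1.2, 0.4]
system = [0.95, 0.70, 0.35, 0.10]
print(round(pearson_correlation(human, system), 3))
```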

Figure 2: Comparison of the correlation value of the PRA with the current approaches.

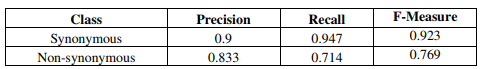

An ideal search algorithm should retrieve the information relevant to a given user query. Success rests on two key measures: precision and recall. Precision is the fraction of retrieved instances that are relevant, whereas recall is the fraction of relevant instances that are retrieved. Both therefore depend on an understanding and a measure of relevance. Put simply, high recall means that the algorithm returned most of the relevant results, and high precision means that it returned more relevant results than irrelevant ones.

Table 2. The precision, recall, and F-measure values for both the synonymous and the non-synonymous classes.
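These definitions can be made concrete with a short sketch. It computes precision and recall from counts of true positives, false positives, and false negatives, together with the balanced F-measure (F1); the paper does not say which weighting of the F-measure it uses, so F1 is an assumption.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from raw counts.

    tp -- relevant items that were retrieved
    fp -- irrelevant items that were retrieved
    fn -- relevant items that were missed
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# 8 correct hits, 2 wrong hits, 8 relevant items missed.
p, r, f = precision_recall_f1(8, 2, 8)
print(p, r, round(f, 3))  # 0.8 0.5 0.615
```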

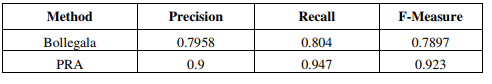

In this paper, the F-measure is computed from the precision and recall values. The results are better than those of previous algorithms. Table 3 shows the improvement in the precision, recall, and F-measure of the proposed algorithm (Sahami et al., 2006).

Table 3. The comparison of F-measure, recall and precision values of the PRA with the previous method

Conclusion

In summary, semantic similarity measures between words play an essential role in information extraction, natural language processing, and various web-based applications. The research paper proposed a pattern extraction algorithm to extract the various semantic relationships that exist between two words, and four word co-occurrence measures were computed from page counts. We combine the co-occurrence measures and the patterns to create a feature vector.

References

Ann Gledson & John Keane, (2008) “Using Web-Search Results to Measure Word-Group Similarity,” 22nd International Conference on Computational Linguistics, pp. 281-28.

Chen H, Lin M & Wei Y, (2006) “Novel Association Measures using Web Search with Double Checking,” International Committee on Computational Linguistics and the Association for Computational Linguistics, pp. 1009-1016.

Cilibrasi R & Vitanyi P, (2007) “The Google similarity distance,” IEEE Transactions on Knowledge and Data Engineering, Vol. 19, No. 3, pp. 370-383.

Danushka Bollegala, Yutaka Matsuo & Mitsuru Ishizuka, (2011) “A Web Search Engine-based Approach to Measure Semantic Similarity between Words,” IEEE Transactions on Knowledge and Data Engineering, Vol. 23, No. 7, pp. 977-990.

Hughes T & Ramage D (2007) “Lexical Semantic Relatedness with Random Graph Walks,” Conference on Empirical Methods in Natural Language Process

Jay J Jiang & David W Conrath, “Semantic Similarity Based on Corpus Statistics and Lexical Taxonomy,” International Conference Research on Computational Linguistics

Lin D, (1998) “Automatic Retrieval and Clustering of Similar Words,” International Committee on Computational Linguistics and the Association for Computational Linguistics, pp. 768-774

Ming Li, Xin Chen, Xin Li, Bin Ma & Paul M. B. Vitányi, (2004) “The Similarity Metric,” IEEE Transactions on Information Theory, Vol. 50, No. 12, pp. 3250-3264.

Pei J, Han J, Mortazavi-Asl B, Wang J, Pinto H, Chen Q, Dayal U & Hsu M, (2004) “Mining Sequential Patterns by Pattern-Growth: The PrefixSpan Approach,” IEEE Transactions on Knowledge and Data Engineering, Vol. 16, No. 11, pp. 1424-1440.

Resnik P, (1995) “Using Information Content to Evaluate Semantic Similarity in a Taxonomy,” 14th International Joint Conference on Artificial Intelligence, Vol. 1, pp. 24-26

Resnik P, (1999) “Semantic Similarity in a Taxonomy: An Information-based Measure and its Application to problems of Ambiguity in Natural Language,” Journal of Artificial Intelligence Research, Vol. 11, pp. 95-130.

Sahami M. & Heilman T, (2006) “A Web-based Kernel Function for Measuring the Similarity of Short Text Snippets,” 15th International Conference on World Wide Web, pp. 377-386
